Welcome to the second chapter of AI As An Investment. I’m thrilled to have you back in this deep dive. To really absorb this, find a quiet spot and get comfortable. A hot cup of coffee or tea works, though a glass of wine is equally fitting. Have a notebook ready for ticker symbols, because this chapter will surface the less-glamorous but absolutely pivotal layer of AI infrastructure: memory and storage. Often outshone by flashy GPUs, these are the hidden workhorses of AI. Let’s explore why AI is frequently memory-bound, not compute-bound, and what that means for investors. I poured extensive research into this (with plenty of data points and examples), so settle in and enjoy.

2.0.1 Companies featured in this article

Memory (HBM/DRAM) – SK hynix (000660.KS), Micron (MU), Samsung Electronics (005930.KS)

Storage (NAND Flash & HDD) – Western Digital (WDC), Seagate Technology (STX), Samsung Electronics (005930.KS)

2. PILLAR II — MEMORY & STORAGE

The Hidden Infrastructure of Intelligence

Memory (HBM / DRAM)

000660.KS (SK hynix)

MU (Micron)

005930.KS (Samsung Electronics)

Storage (NAND / SSD / HDD ecosystem)

(Category layer discussed throughout: NAND suppliers, SSD vendors, HDD and archival stack)

Packaging & Testing (memory-adjacent chokepoints)

ASE / SPIL / Amkor (as referenced later in the chapter)

Compute-memory interconnect & pooling (emerging layer)

CXL ecosystem (servers, controllers, switch layer, adoption signals)

I’m keeping this list tight on purpose. You already know how I think about this. If the bottleneck moves, the winners list expands. If the bottleneck tightens, the winners list compresses into whoever controls supply.

2.1 Introduction — Memory as the Real Cost of Intelligence

There’s a reflex in markets. People see AI, they think GPU. They see NVIDIA, they stop there. It’s understandable; GPUs are loud. They attract a religious following. Then you spend one week inside real infrastructure numbers and you realize something uncomfortable.

Compute does the math. Memory decides whether the math happens at all.

The market only realized this recently.

Memory is the logistics layer of intelligence. The plumbing, the arteries, the supply chain inside the machine if you want. If you have ever visited a factory, you know the feeling: the fancy robot arm is useless if the parts arrive late. Most AI systems today behave exactly like that. Expensive compute waiting, chewing power, producing heat, while the real limiting factor sits somewhere between HBM stacks, DRAM channels, SSD tiers, interconnect fabrics, and the quiet tyranny of latency.

This chapter is about that tyranny. And how to profit from it without romanticizing it.

2.1.1 Fundamental Role of Memory in the AI Economy

Memory infrastructure forms the backbone of all AI systems, fundamentally determining the speed, scale, and efficiency of data processing and model training.

That sentence sounds like textbook writing. It deserves to sound like a headache instead, because that is what memory becomes when you scale.

When a model is small, you can pretend memory is an implementation detail. When a model becomes large, memory becomes the bill.

The Stanford AI Index 2025 framing is the right anchor: memory systems can represent roughly 30 to 40% of total AI training and inference costs. That range matters. 30% means “important line item.” 40% means “strategic constraint.” And for some deployments, it gets worse. Not because memory is magically more expensive, but because the system spends time waiting on it, and time is capex amortization, and capex amortization is the real cost of AI.

Models have grown like a disease you can’t treat with optimism. Parameters explode, context windows expand, KV caches swell, and dataset sizes turn into multi-terabyte rivers you try to sip through a straw. Every one of those trends has one common consequence: more data needs to be moved, staged, held, and served at speed.
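To put numbers on the KV-cache point, here is a minimal sketch of the standard sizing arithmetic for a transformer’s key/value cache. The model shape (80 layers, 8 key/value heads, head dimension 128, 16-bit values) is a hypothetical configuration I picked for illustration, not any specific product. The formula also ignores framework overhead and cache paging.

```python
from dataclasses import dataclass

@dataclass
class ModelConfig:
    """Hypothetical transformer shape; illustrative, not a real product."""
    layers: int
    kv_heads: int         # key/value heads (fewer than query heads under grouped-query attention)
    head_dim: int
    bytes_per_value: int  # 2 for FP16/BF16

def kv_cache_bytes(cfg: ModelConfig, context_tokens: int, batch: int) -> int:
    """Bytes needed to hold keys and values for every token of every sequence.

    The factor of 2 covers one key vector and one value vector per head per layer.
    """
    per_token = 2 * cfg.layers * cfg.kv_heads * cfg.head_dim * cfg.bytes_per_value
    return per_token * context_tokens * batch

cfg = ModelConfig(layers=80, kv_heads=8, head_dim=128, bytes_per_value=2)
for ctx in (8_192, 32_768, 131_072):
    gib = kv_cache_bytes(cfg, ctx, batch=8) / 2**30
    print(f"context {ctx:>7,} tokens, batch 8: {gib:6.1f} GiB of KV cache")
```

With these assumed settings, the cache costs 320 KiB per token per sequence. A batch of 8 therefore needs 20 GiB at an 8K context and 320 GiB at 128K. Context length multiplies straight into memory, and that is why long-context products are a memory story.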

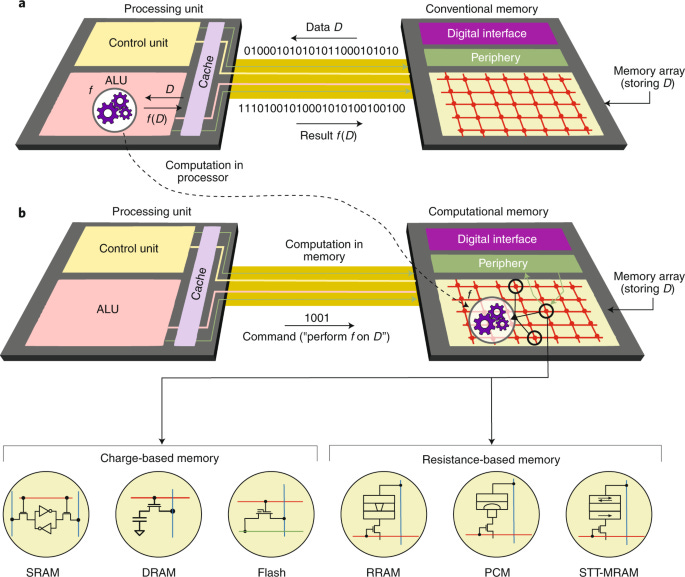

Take the simplest mental picture. A model is weights plus activation flow. Weights live somewhere. They need to be pulled into fast memory. They need to be reused constantly. If weights are not close enough, your compute sits idle. Idle compute is expensive compute. This is the first fundamental role of memory: keeping compute busy.
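Here is a back-of-the-envelope way to see idle compute. It is a sketch under loud assumptions: a hypothetical 70B-parameter model with 16-bit weights, running on a hypothetical accelerator with 3 TB/s of HBM bandwidth and 1 PFLOP/s of peak math. During token-by-token generation, each step has to stream every weight from memory once. The math for that step is roughly 2 FLOPs per parameter per sequence in the batch. The code ignores KV-cache traffic, so treat it as a bound, not a benchmark.

```python
# Hypothetical, round-number figures for illustration only; not any specific chip or model.
PARAMS = 70e9            # model parameters
BYTES_PER_PARAM = 2      # 16-bit weights
HBM_BYTES_PER_S = 3e12   # assumed memory bandwidth
PEAK_FLOPS = 1e15        # assumed peak math throughput

def decode_step_seconds(batch: int) -> tuple[float, float]:
    """Lower bounds on one generation step: (memory time, compute time).

    Simplifications: every weight is read once per step and shared by the batch;
    about 2 FLOPs per parameter per sequence; KV-cache traffic is ignored.
    """
    memory_s = PARAMS * BYTES_PER_PARAM / HBM_BYTES_PER_S
    compute_s = 2 * PARAMS * batch / PEAK_FLOPS
    return memory_s, compute_s

for batch in (1, 16, 256):
    memory_s, compute_s = decode_step_seconds(batch)
    # Assume reads and math overlap, so a step lasts as long as the slower of the two.
    busy = compute_s / max(memory_s, compute_s)
    print(f"batch {batch:>3}: memory {memory_s * 1e3:5.1f} ms, "
          f"compute {compute_s * 1e3:5.2f} ms, compute busy {busy:.1%}")
```

Under these assumptions, a single request keeps the math units busy well under 1% of the time, because the chip spends the step reading weights. Batching raises utilization, since every sequence reuses the same weight read. The catch is that each extra sequence needs its own KV cache, so the workaround runs straight back into memory capacity.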

The second role is subtler. Memory decides what kind of AI is economically possible. If your architecture cannot hold enough active state cheaply, certain applications stay science projects. Real-time agents with long memory. Robotics with heavy sensor fusion. Multimodal systems that keep large buffers alive. Retrieval systems that query huge vector stores with low latency. Each of these is a memory and storage problem.

So yes, memory is infrastructure. But it also shapes the product frontier.

2.1.2 Why Speed and Data Proximity Condition Performance

The core problem is minimizing data-transfer latency between compute units and the memory hierarchy.

The phrase “data gravity” is a useful weapon here. Data gravity says: the larger your data pile becomes, the more it pulls compute toward it, because moving the data becomes the most expensive part. At some point the cost of moving bytes dominates the cost of doing math.
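Data gravity is easy to quantify with nothing more than link speed. Here is a minimal sketch, assuming a hypothetical 100 Gb/s link that delivers 80% of its rated speed in practice. Real efficiency varies with protocol and storage, so the `efficiency` default is my assumption.

```python
def transfer_hours(dataset_tb: float, link_gbit_s: float, efficiency: float = 0.8) -> float:
    """Hours needed to move a dataset over a network link.

    dataset_tb: dataset size in terabytes (10**12 bytes).
    link_gbit_s: rated link speed in gigabits per second.
    efficiency: assumed fraction of rated speed achieved in practice (hypothetical default).
    """
    bits = dataset_tb * 1e12 * 8
    return bits / (link_gbit_s * 1e9 * efficiency) / 3600

for size_tb in (10, 100, 1_000):
    print(f"{size_tb:>5,} TB over 100 Gb/s: {transfer_hours(size_tb, 100):5.1f} hours")
```

At these assumed rates, a petabyte takes more than a day just to move. If the job that consumes it runs for a few hours, the transfer is the job. At that point it becomes cheaper to put the compute where the data already sits.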

This is where beginner investors get tricked. They hear “compute is expensive.” True. But the system-level cost often comes from the silent stuff:

bandwidth gaps between GPU cores and DRAM,

latency gaps between DRAM and NVMe,

bottlenecks in staging data from storage into active memory,

and the scaling tax of trying to keep many nodes coherent.

You can think of the modern AI system as a chain of rooms, each door smaller than the last. Data must pass through each door. GPUs are the furnace at the end. If the doors are narrow, the furnace starves. You can buy more furnaces. It doesn’t fix narrow doors.
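The chain-of-rooms picture maps onto a simple rule: a serial pipeline moves data no faster than its narrowest stage. Here is a minimal sketch with hypothetical per-stage rates. The stage names and GB/s figures are illustrative assumptions, not measurements of any real system.

```python
# Hypothetical per-stage throughputs in GB/s; illustrative assumptions, not measurements.
stages = {
    "object storage -> node": 25.0,
    "NVMe SSD -> host DRAM": 50.0,
    "host DRAM -> GPU": 60.0,
    "GPU consumption": 200.0,  # how fast the compute could ingest data if never starved
}

def pipeline_throughput(stage_rates: dict[str, float]) -> tuple[str, float]:
    """Return the narrowest stage and its rate: a serial pipeline runs at its minimum."""
    name = min(stage_rates, key=stage_rates.get)
    return name, stage_rates[name]

def gpu_fed_fraction(stage_rates: dict[str, float]) -> float:
    """Share of the GPU's appetite the pipeline can actually satisfy."""
    _, rate = pipeline_throughput(stage_rates)
    return rate / stage_rates["GPU consumption"]

bottleneck, rate = pipeline_throughput(stages)
print(f"bottleneck: {bottleneck} at {rate:.0f} GB/s, GPU fed {gpu_fed_fraction(stages):.1%}")

# Buy a furnace twice as hungry: the narrow door does not move.
bigger_furnace = {**stages, "GPU consumption": 400.0}
bottleneck, rate = pipeline_throughput(bigger_furnace)
print(f"after upgrade: {bottleneck} at {rate:.0f} GB/s, GPU fed {gpu_fed_fraction(bigger_furnace):.1%}")
```

Doubling the consumption rate leaves throughput at the same 25 GB/s, and it halves the share of the GPU that actually gets fed.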

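This is why HBM became central. Not because it is elegant. Because it is physically close. It sits next to the compute die on the same package. That allows a far wider and shorter connection than DRAM modules sitting out on the board. Wide, short paths mean more bandwidth and less energy per bit moved. Starved bandwidth is idle time. Idle time is wasted capex.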

When someone tells you “our GPU is twice as fast,” you should instantly ask: how often is it waiting?
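The “how often is it waiting” question has a classic answer in Amdahl’s law: only the share of wall-clock time spent computing gets faster when the chip gets faster. Here is a minimal sketch, assuming the upgrade leaves waiting time unchanged and that waiting does not overlap with compute. If some of that waiting overlaps with compute in a memory-bound job, the gain from a faster chip shrinks even further.

```python
def effective_speedup(compute_fraction: float, compute_speedup: float) -> float:
    """Amdahl's law applied to a chip that spends part of its time waiting on memory.

    compute_fraction: share of wall-clock time the chip is actually computing.
    compute_speedup: how much faster the new chip does the math (e.g. 2.0).
    Assumes waiting time is unchanged and does not overlap with compute.
    """
    waiting = 1.0 - compute_fraction
    return 1.0 / (waiting + compute_fraction / compute_speedup)

for busy in (0.9, 0.5, 0.25):
    print(f"busy {busy:.0%} of the time: a '2x faster' GPU delivers "
          f"{effective_speedup(busy, 2.0):.2f}x")
```

A chip that is busy only a quarter of the time turns “twice as fast” into roughly 1.14x. Compute-bound shops capture most of the headline number. Memory-bound shops mostly buy a more expensive wait.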

2.1.3 Memory’s Share in Total Cost (30–40%)

Let’s make it concrete.

If memory is 30–40% of total cost, this has two consequences that investors often miss.

First, memory is not a rounding error. If a hyperscaler can reduce memory footprint or memory bandwidth demand per unit of inference, it can cut cost meaningfully. That cost reduction can be reinvested into more capacity, lower pricing, or higher margins. In all three cases, memory economics shape competitive dynamics upstream and downstream.
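The first point is simple arithmetic. Say memory is a share s of total cost and you cut memory cost per inference by a fraction r. Total cost then falls by s × r, and the same budget buys 1 / (1 − s × r) times as much inference. Here is a minimal sketch, assuming costs add linearly and using a hypothetical 25% memory-cost cut.

```python
def memory_cut_payoff(memory_share: float, memory_cut: float) -> tuple[float, float]:
    """Return (total cost saving, extra capacity at the same budget) as fractions.

    memory_share: memory's share of total cost per inference (the 30-40% range above).
    memory_cut: fractional reduction in memory cost per inference (hypothetical).
    Assumes total cost is a linear sum and nothing else changes.
    """
    saving = memory_share * memory_cut
    extra_capacity = 1.0 / (1.0 - saving) - 1.0
    return saving, extra_capacity

for share in (0.30, 0.40):
    saving, extra = memory_cut_payoff(share, memory_cut=0.25)
    print(f"memory share {share:.0%}: total cost -{saving:.1%}, capacity +{extra:.1%}")
```

Under those assumptions, a 25% memory-cost cut is worth 7.5% to 10% of total cost, or roughly 8% to 11% more capacity for the same spend. At hyperscaler budgets, that is real money.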

Second, memory scarcity creates pricing power. If memory is structurally constrained, then whoever supplies it becomes the choke point. Choke points collect rents. Rents show up in gross margins, in negotiating power, in long lead-time contracts, in “allocation” language, in customers begging for supply.

The McKinsey-style framing fits here too: memory costs can reach several million dollars annually for enterprise-scale models. That’s believable even without fetishizing the number. Any large AI deployment has recurring memory spend through:

direct procurement of DRAM / HBM / SSD,

energy costs tied to moving bits,

and the opportunity cost of longer training runs due to memory bottlenecks.

And opportunity cost is real: if training takes 3x longer because the pipeline is memory-bound, you don’t only pay 3x more in electricity. You also pay 3x more in depreciation of the infrastructure you already bought. That is the killer.
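To see why depreciation is the killer, here is a minimal sketch of what one training run costs under hypothetical numbers: a $300M cluster depreciated straight-line over four years, drawing 20 MW at $80/MWh. All figures are illustrative assumptions. Holding power draw constant is also a simplification, since a stalled cluster may draw somewhat less.

```python
# Hypothetical cluster economics; every figure is an illustrative assumption.
CLUSTER_CAPEX_USD = 300e6
USEFUL_LIFE_HOURS = 4 * 365 * 24   # straight-line depreciation over four years
POWER_MW = 20.0                    # assumed constant draw while the run is active
PRICE_USD_PER_MWH = 80.0

def run_cost(hours: float) -> tuple[float, float]:
    """Return (depreciation, energy) in dollars charged to a run of the given length."""
    depreciation = CLUSTER_CAPEX_USD / USEFUL_LIFE_HOURS * hours
    energy = POWER_MW * PRICE_USD_PER_MWH * hours
    return depreciation, energy

baseline_hours = 500.0
for label, hours in (("compute-bound", baseline_hours), ("memory-bound, 3x", 3 * baseline_hours)):
    dep, energy = run_cost(hours)
    print(f"{label:>17}: depreciation ${dep:,.0f} + energy ${energy:,.0f} = ${dep + energy:,.0f}")
```

With these assumptions, depreciation is more than five times the power bill. A 3x slowdown therefore shows up mostly as hardware you paid for sitting in a queue.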

2.1.4 Interaction Between Compute, Memory, and Energy

This is where AI becomes industrial, in a way that makes finance people uneasy because it stops behaving like software.

Compute, memory, and energy form a coupled system.

Improve compute, you increase appetite for bandwidth. Improve bandwidth, you increase power draw. Increase power draw, you increase cooling needs. Increase cooling complexity, you constrain physical design. Constrain physical design, you constrain density. Constrain density, you slow deployment.

It’s a loop. A physical loop.

This is also why heavy APAC investment in memory manufacturing matters: memory is a geopolitical capability. Korea and Taiwan sit in the middle of the AI supply chain in a way that makes sovereignty people twitchy. Investors should price that in as risk.

2.2 The Memory Hierarchy — From Cache to Cold Storage

If Pillar I was “power to tokens,” Pillar II is “bytes to tokens.”